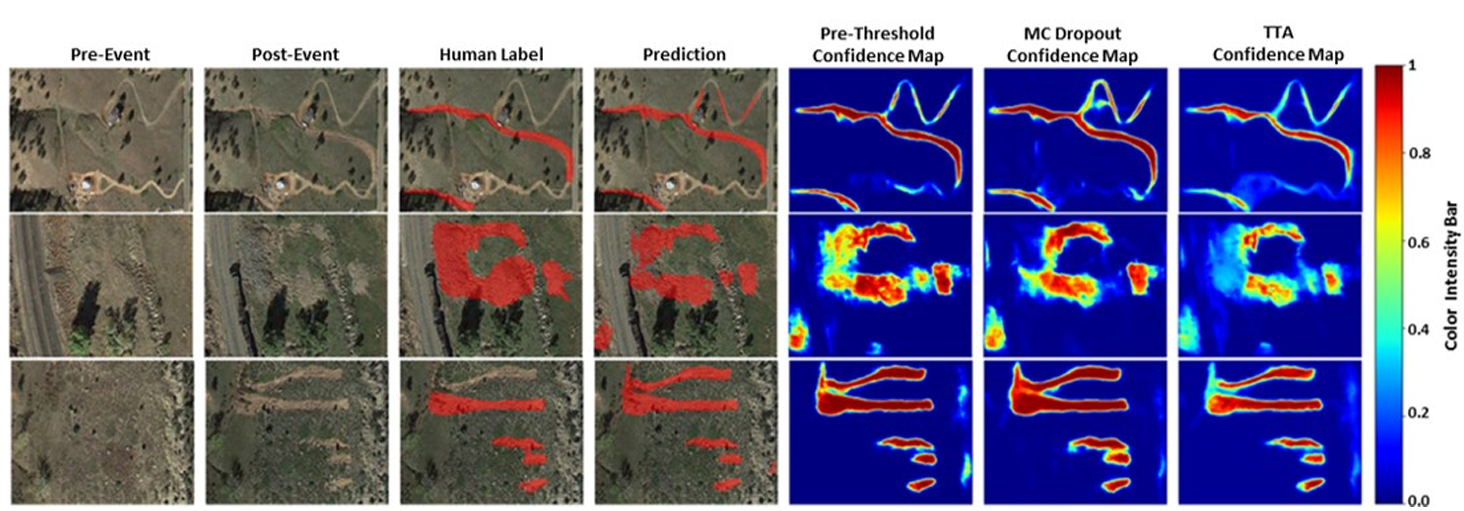

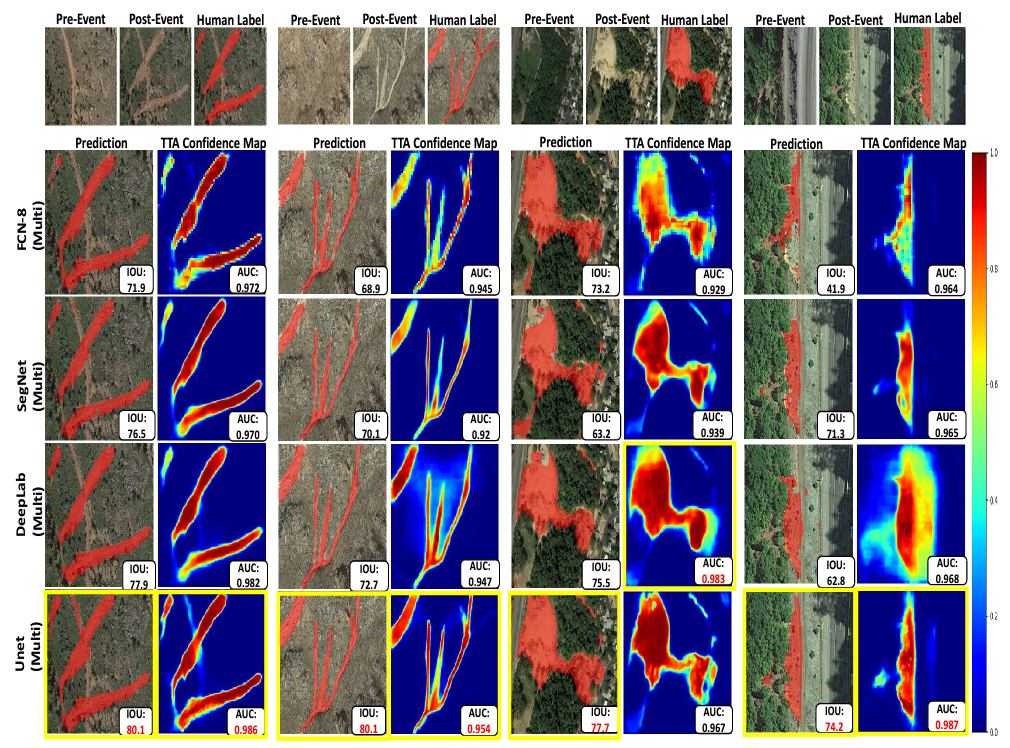

Landslides are a recurring, widespread hazard. Preparation and mitigation efforts can be aided by a high-quality, large-scale dataset that covers at-risk areas worldwide. Such a dataset does not currently exist and is impossible to construct manually. Recent automated efforts focus on deep learning models for landslide segmentation (pixel labeling) from satellite imagery. However, it is also important to characterize the uncertainty, or confidence level, of such segmentations. Accurate and robust uncertainty estimates can enable low-cost (in terms of manual labor) oversight of auto-generated landslide databases to resolve errors, identify hard negative examples, and increase the size of labeled training data. In this paper, we evaluate several methods for assessing the pixel-level uncertainty of the segmentation. We compare three methods that do not require architectural changes: Pre-Threshold activations, Monte-Carlo Dropout, and Test-Time Augmentation, a method that measures the robustness of predictions in the face of data augmentation. Experimentally, the quality of the uncertainty estimates from Test-Time Augmentation was consistently higher than that of the other two methods across a variety of models and metrics on our dataset.
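To make the idea behind Test-Time Augmentation concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: `predict_fn` is a hypothetical stand-in for any segmentation model that maps an `(H, W, C)` image to an `(H, W)` map of landslide probabilities, the augmentation set (identity, two flips, one 90-degree rotation) is chosen only for illustration, and the per-pixel standard deviation is just one possible way to score disagreement across views.

```python
import numpy as np


def tta_uncertainty(image, predict_fn):
    """Per-pixel mean probability and spread across augmented views.

    image:      array of shape (H, W, C).
    predict_fn: hypothetical model wrapper, (H, W, C) -> (H, W) probabilities.
    """
    # Each entry pairs an augmentation with the inverse that maps the
    # prediction back onto the original pixel grid.
    transforms = [
        (lambda x: x, lambda y: y),                                    # identity
        (lambda x: np.flip(x, axis=1), lambda y: np.flip(y, axis=1)),  # horizontal flip
        (lambda x: np.flip(x, axis=0), lambda y: np.flip(y, axis=0)),  # vertical flip
        (lambda x: np.rot90(x, k=1, axes=(0, 1)),
         lambda y: np.rot90(y, k=-1, axes=(0, 1))),                    # 90-degree rotation
    ]

    aligned = []
    for forward, inverse in transforms:
        prob = predict_fn(forward(image))  # predict on the augmented view
        aligned.append(inverse(prob))      # undo the augmentation on the output

    stacked = np.stack(aligned, axis=0)    # shape: (num_views, H, W)
    # Assumption: high standard deviation across views marks pixels where the
    # prediction is not robust to augmentation, i.e. higher uncertainty.
    return stacked.mean(axis=0), stacked.std(axis=0)
```

With a purely pixel-wise `predict_fn`, every view agrees after the inverse transform and the spread is zero. A real convolutional model is generally not exactly equivariant to flips and rotations, so the spread tends to be larger where its predictions are less stable.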

@article{nagendra2023estimating,

title={Estimating Uncertainty in Landslide Segmentation Models},

author={Nagendra, Savinay and Shen, Chaopeng and Kifer, Daniel},

journal={arXiv preprint arXiv:2311.11138},

year={2023}

}